Getting your website indexed by Google is an essential first step in improving online visibility. Without indexing, your pages cannot appear in search results, and your target audience will never see the work you have put into them. Google’s crawling and indexing processes have become more refined, but following best practices in 2025 still helps your site get discovered faster and gives it a better chance to rank.

This guide will detail the basic steps of indexing your website on Google and also describe how Google crawls and indexes your pages.

Steps to Index Your Website on Google

| Step | What It Involves | Why It’s Important |
|---|---|---|
| Submit Your Website to Google | Use Google Search Console to request indexing | Alerts Google about your site’s existence |
| Create an XML Sitemap | Generate a roadmap of your site’s structure | Helps Google understand and navigate content |
| Create a Robots.txt File | Tell search engine crawlers which parts of your site they may crawl | Keeps crawlers focused on valuable pages instead of unnecessary ones |
| Build Quality Backlinks | Earn links from authoritative sites | Improves site credibility and crawl priority |
| Use Blog Directories | Submit your site to reputable directories | Increases exposure and initial indexing speed |

How Does Google Crawl and Index Your Pages?

Before going through the steps in detail, it helps to understand how Google discovers and indexes your website.

- Crawling: Google discovers your website by sending its crawler, known as Googlebot. The crawler finds new pages, revisits updated ones, and collects data for Google’s index.

- Indexing: After crawling a page, Google will analyze the content, structure, and keywords of the page to determine its relevance and store it in the Google Index.

- Ranking: After indexing, Google ranks your page based on factors like relevance, quality, and user intent.
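The crawling stage can be illustrated with a small sketch of link discovery: a crawler reads a page, extracts its links, and queues the ones it has not seen. This is only a toy illustration using Python's standard library, not how Googlebot is actually implemented; the names `LinkCollector`, `discover_internal_links`, and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, value))


def discover_internal_links(page_url, html):
    """Return same-host links found in html, in order, without duplicates."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    host = urlparse(page_url).netloc
    seen = []
    for link in collector.links:
        if urlparse(link).netloc == host and link not in seen:
            seen.append(link)
    return seen


# Hypothetical page content used only for this illustration.
sample_html = """
<a href="/blog/">Blog</a>
<a href="about.html">About</a>
<a href="https://other.example.org/">Partner</a>
<a href="/blog/">Blog again</a>
"""
print(discover_internal_links("https://example.com/", sample_html))
```

The sketch shows why internal linking matters: a page that no other page links to never enters the list of discovered URLs unless it is supplied some other way, such as through a sitemap.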

If your pages aren’t indexed, they can’t appear in search results. Follow these steps to ensure your site gets indexed quickly and effectively.

1. Submit Your Website to Google

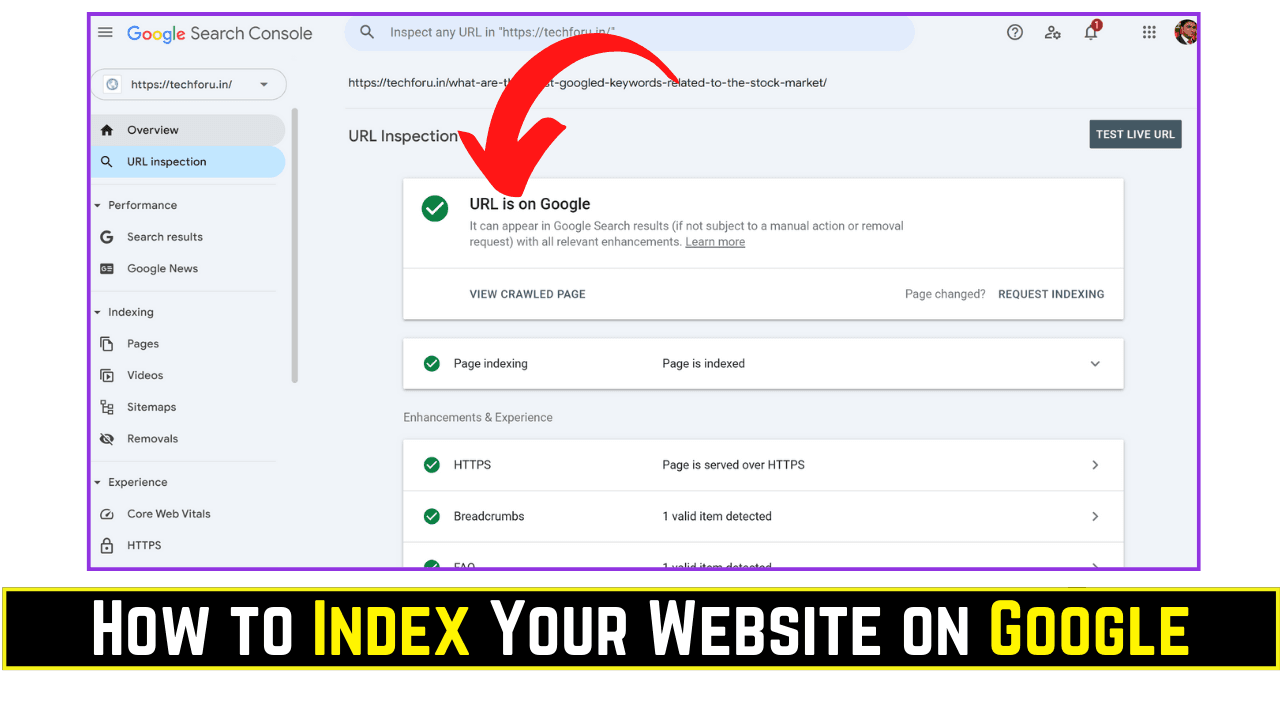

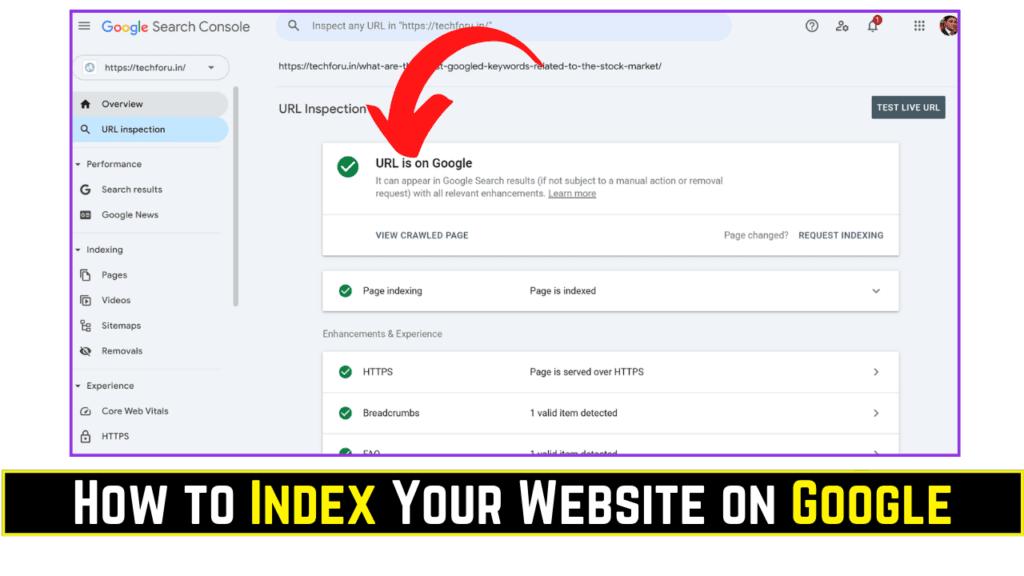

You can tell Google directly that your website exists by using Google Search Console. This is the most direct way to prompt Google to start crawling your site, although a request does not guarantee immediate indexing.

Steps to Submit Your Website to Google:

- Go to Google Search Console and verify your domain ownership.

- Use the URL Inspection Tool to request indexing for individual pages.

- Monitor the Page indexing report (formerly called Coverage) to identify and fix indexing issues.

2. Create an XML Sitemap and Submit It to Google

An XML sitemap is a file that lists the important pages on your website and acts as a roadmap for search engine crawlers. A sitemap helps Googlebot find your site’s pages, especially those buried deep within your site’s structure.

Steps to create an XML Sitemap and Submit It to Google:

- Generate an XML sitemap using tools like Yoast SEO, Screaming Frog, or XML-Sitemaps.com.

- Submit the sitemap to Google via Google Search Console:

  - Navigate to the Sitemaps section.

  - Enter your sitemap URL (e.g., https://example.com/sitemap.xml) and click Submit.
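If you prefer to see what such a file contains rather than rely on a generator tool, the following is a minimal sketch that builds a sitemap with Python's standard library. It assumes a short, hand-maintained list of page URLs; the `build_sitemap` helper and the example URLs are hypothetical, and a real site would usually let its CMS or an SEO plugin produce this file.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # <loc> holds the absolute URL of the page.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages used only for this illustration.
pages = [
    "https://example.com/",
    "https://example.com/blog/",
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Saving the returned string as `sitemap.xml` at the site root gives you the URL to enter in the Sitemaps section.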

3. Create a Robots.txt File

A robots.txt file tells search engine crawlers which parts of your site they may or may not crawl. A well-configured robots.txt file helps Google spend its crawl effort on valuable content instead of irrelevant or duplicate pages. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still be indexed if other sites link to it.

Steps to Create a Robots.txt File:

- Create a robots.txt file at the root of your website (e.g., https://example.com/robots.txt).

- Use directives like:

  - `User-agent: *` to apply rules to all crawlers.

  - `Disallow: /admin/` to block specific sections.

- Check your robots.txt file using the robots.txt report in Google Search Console, which replaced the older robots.txt Tester.
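Before publishing the file, you can also sanity-check your rules locally. The sketch below uses Python's built-in `urllib.robotparser` on the two directives mentioned above. Note that this parser is not Google's own and may treat edge cases such as wildcards differently, so treat it as a rough check; the `is_crawl_allowed` helper and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The example rules from the steps above.
robots_txt = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
# parse() accepts the file content as a list of lines, so no network is needed.
parser.parse(robots_txt.splitlines())


def is_crawl_allowed(url, user_agent="Googlebot"):
    """Return True if the rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(is_crawl_allowed("https://example.com/admin/settings"))  # False
print(is_crawl_allowed("https://example.com/blog/post"))       # True
```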

4. Build Some Quality Backlinks

Backlinks from reputable sites signal to Google that your site is legitimate and worth crawling more frequently. Quality backlinks improve the authority of your website and help Google discover your pages faster.

Steps to Build Some Quality Backlinks:

- Create high-quality content that naturally attracts links.

- Reach out to bloggers, influencers, or industry sites for guest posting opportunities.

- Share your content on social media to encourage shares and links.

5. Use Blog Directories to Submit Your Site

Submitting your website to reputable blog directories increases its visibility and provides a first set of backlinks, which can help Google discover and index it sooner.

Steps to Use Blog Directories:

- Find credible directories like AllTop, Blogarama, or Best of the Web.

- Submit your site with accurate descriptions and keywords.

- Avoid spammy directories that could harm your SEO.

Tips to Ensure Faster Indexing

- Keep content updated: Fresh content invites Google to crawl your website more often.

- Fix crawl errors: Use Google Search Console to identify and resolve the issues that prevent indexing.

- Optimize page load speed: Faster pages improve the user experience and let Google crawl your site more efficiently.

- Make your site mobile-friendly: Google uses mobile-first indexing, so your website has to be optimized for mobile devices.
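One common issue that prevents indexing is a leftover `noindex` directive in a page's robots meta tag, for example from a staging environment. As a rough sketch, assuming you already have the page's HTML as a string, the following checks for that directive; the `RobotsMetaParser` class and `has_noindex` helper are hypothetical names, and the check does not cover the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # The content attribute is a comma-separated list of directives.
            for part in attr_map.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if the page's robots meta tag asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
print(has_noindex('<meta name="robots" content="index, follow">'))    # False
```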

Conclusion

Getting your site indexed by Google is a critical step toward establishing online visibility and attracting organic traffic. Submitting your site through Search Console, creating an XML sitemap, configuring robots.txt, and building quality backlinks all help Google discover and rank your pages.

Remember, indexing is just the starting point. To maintain visibility and rank higher, you need to continuously optimize your site for SEO, produce quality content, and monitor your performance. With the right strategies, your website will be well placed to compete in the digital landscape of 2025.

Frequently Asked Questions (FAQs)

How long does it take for Google to index my website?

It typically takes a few days to a few weeks, depending on your site’s quality and activity.

Do I need a sitemap for a small website?

Yes, a sitemap helps ensure all your pages are discovered, regardless of your site’s size.

Can I force Google to index my site faster?

While you can’t force it, submitting your site via Search Console and building backlinks can accelerate the process.

What happens if I block pages in robots.txt?

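Those pages won’t be crawled, which is useful for admin panels or duplicate content. However, a blocked URL can still appear in the index if other pages link to it, so use a noindex directive on a crawlable page when you need to keep it out of search results entirely.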

Are blog directories still relevant in 2025?

Yes, credible directories can provide initial exposure and backlinks, but avoid spammy ones.